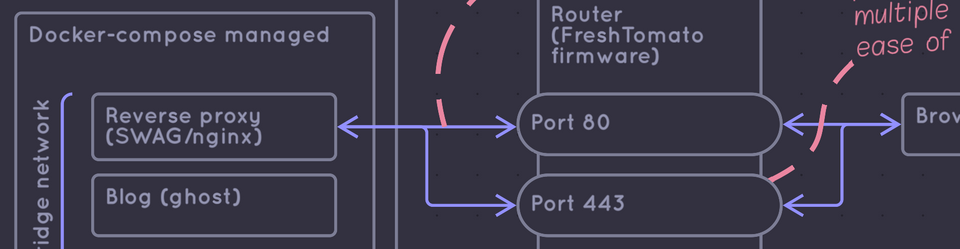

A combination of moving, neglect, and time left my combined home server and network-attached storage (NAS) in a non-functional state. I've fixed that now (you can see the end result in the diagram below, as well as at the bottom of the post), but let's catch up on how I got here.

About three years ago I set up a free-tier instance in Oracle's public cloud and proceeded to install a bunch of self-hosted applications on it. Some of this software was vended as Docker containers, some as npm modules with external dependencies, and some required a LAMP stack or a Python env. After a little bit of experimentation, I decided to limit my search to containerized (or easily containerized) software; maintaining multiple, often colliding Python/Apache runtimes wasn't worth it.

At about this time, I stumbled on linuxserver.io. They vend a huge array of Docker containers, including SWAG, a highly configurable reverse proxy. I set up SWAG, registered a domain name, and initially made the applications on that instance accessible through path mapping. This turned out to be a misery; more on that later.

Shortly after, I realized that while a lot of this software could run on a cloud instance, I needed to run some of it locally. For instance, my media library was now larger than a free-tier instance's block storage drive. So I grimaced, set up port forwarding, and used nginx to suture together my NAS's applications and my cloud instance's applications under the same domain name. This catches us up to when I moved - and changed ISPs - and thus broke pretty much the whole setup.

At this point - about a week ago - I decided to resuscitate the NAS so I could get this blog and my self-hosted email server back up. Facing the pile of iterative mistakes from before, I set out with a few goals:

- All applications should run on the NAS. This has serious maintainability advantages and means that my SSL termination occurs at the correct place. While I should eventually set up SSL certs for the services on my LAN that are behind nginx, my old setup terminated SSL on the cloud instance. The calls the cloud instance made to the NAS on my home LAN were unencrypted in transit, and traversed many servers I don't own.

- All applications should be described as a Docker Compose stack. The experience of administering stacks is great: they scale up in complexity well, they can describe dependency relationships, and you don't lose critical parts of their setup (e.g., environment variables) if the container is destroyed. Docker-autocompose helped out immensely here.

- All applications should be available through subdomain mapping, not path mapping. Path mapping breaks spectacularly if application developers don't anticipate it, and fixing it requires nginx rewriting asset and API links using regex. No thanks. As an added benefit, password managers work better with subdomain mapping.
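To make the path-mapping pain concrete, here is a minimal Python sketch of the kind of link rewriting a reverse proxy ends up doing. Everything in it is hypothetical: the `/wiki` prefix, the sample HTML, and the `rewrite_links` helper are made up for illustration, and this is not how nginx does it internally. It only shows why the regex approach is fragile.

```python
import re

# Hypothetical prefix under which the proxy exposes the app (e.g. example.com/wiki/).
PREFIX = "/wiki"

# Matches root-relative URLs in href/src attributes, e.g. href="/static/app.css".
# The negative lookahead skips protocol-relative URLs such as src="//cdn.example.com/x.js".
ATTR_URL = re.compile(r'\b(href|src)="/(?!/)')


def rewrite_links(html: str, prefix: str = PREFIX) -> str:
    """Prepend the path prefix to root-relative href/src attributes."""
    return ATTR_URL.sub(lambda m: f'{m.group(1)}="{prefix}/', html)


# A made-up page: two asset links plus an API call buried in inline JavaScript.
page = (
    '<link href="/static/app.css">'
    '<script src="/static/app.js"></script>'
    "<script>fetch('/api/items')</script>"
)

rewritten = rewrite_links(page)
print(rewritten)
```

The `href` and `src` attributes pick up the prefix, but the `fetch('/api/items')` call inside the script is left alone, so the API request still goes to the wrong path. Every pattern like that needs its own rule. With subdomain mapping the application sits at `/` and no rewriting is needed.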

This was a lot of work, but it more or less went as planned. Except, remember how I changed ISPs when I moved? Yeah. Turns out my new ISP:

- Blocks all ingress and egress on port 25 (SMTP), breaking my mail server's ability to receive mail. I worked around this by setting up a Forward Email account, pointing my domain's MX record at their mail server, and having them forward mail to me on a non-standard port.

- Won't provide a static IP address, and rotates it semi-frequently. I bypassed this by configuring my router for dynamic DNS (DDNS) and changing DNS providers to one that supports DDNS updates. Shoutout to FreeDNS, who have a blazingly fast website straight out of the early 90s, and are doing something really cool with communal vanity domain names.
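For a sense of what a dynamic DNS client has to decide each time it runs, the core logic boils down to roughly the following. This is a sketch in Python under stated assumptions, not the router's actual code: `decide_update` is a hypothetical helper, the addresses are arbitrary examples, and the update request itself is provider-specific, so it is left out.

```python
import ipaddress
from typing import Optional


def decide_update(recorded: Optional[str], current: str) -> bool:
    """Return True when the DNS record should be pointed at `current`.

    `recorded` is the address the A record holds right now (None if unknown);
    `current` is the public address the ISP has currently assigned.
    """
    # ip_address() raises ValueError on malformed input, so garbage from an
    # IP-lookup step never ends up in DNS.
    current_ip = ipaddress.ip_address(current)
    if not current_ip.is_global:
        # A private or reserved address means the wrong interface was measured.
        raise ValueError(f"{current} is not a publicly routable address")
    if recorded is None:
        return True
    return ipaddress.ip_address(recorded) != current_ip


# Arbitrary example addresses: one unchanged, one rotated.
print(decide_update("1.1.1.1", "1.1.1.1"))
print(decide_update("1.1.1.1", "8.8.8.8"))
```

Only sending an update when the address actually changes keeps the client from hammering the DNS provider, and rejecting non-public addresses guards against publishing a LAN address by accident.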

And that catches us up - to a home server with functional DNS, a reverse proxy with SSL termination, a consistent containerization and config strategy, and a mail server that scores 10/10 on mail tester. Whew.